FUNDED BY GACR CR, 2021-2024

About the project

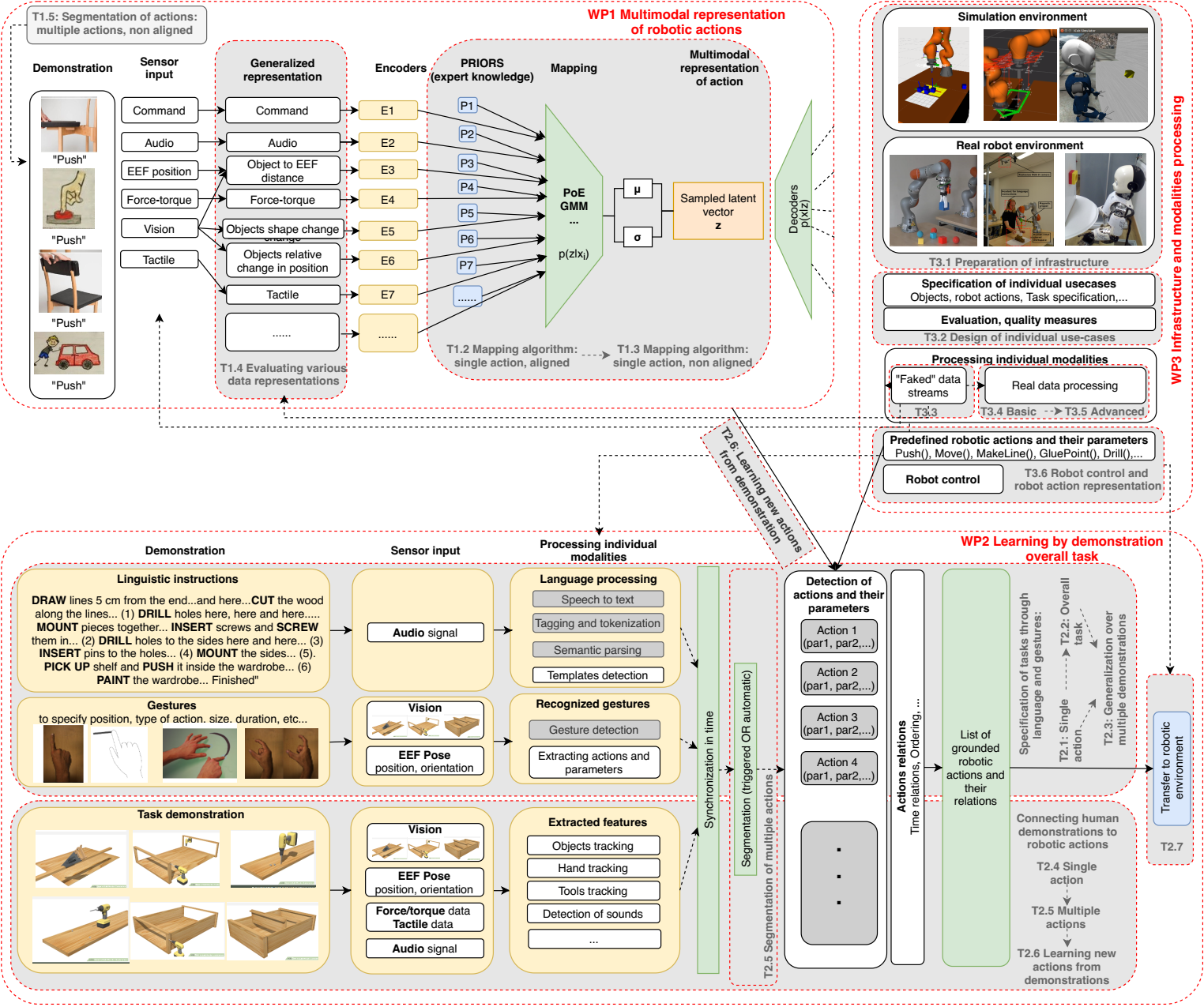

In most robotic applications, robot actions (such as pick, place, push, touch, and mount) are programs written by expert roboticists. Faced with the uncertainty of the real world and its need for flexibility, these approaches often lack the required robustness. When we consider how humans solve dexterous manipulation tasks, we observe that many modalities (vision, proprioception, touch, etc.) are combined to form a robust reactive procedure for manipulating and navigating our surroundings. In this project, we will design a mapping algorithm that enables us to create such a multimodal representation of actions, one that incorporates prior knowledge about the uncertainty of the different domains. We will show how this representation can be used to ease the teaching of robotic actions and to generalize them to new embodiments (e.g. different robots or grippers) and environments. Finally, we will apply the results to learning by demonstration.

Aims of the project

Explore possible representations of input sensor data and design a task-independent multimodal representation of actions that combines the visual, linguistic, tactile, and motor domains. Such a representation should enable easier transfer to new environments and embodiments, as well as automatic detection of actions in demonstrations.

Basic information

The project is supported by the Czech Science Foundation (GACR) under Project no. 21-31000S. The principal investigator is Dr. Karla Stepanova.

The project runs from 7/2021 to 6/2024.

Architecture overview