Imitrob project

IMITATION LEARNING SUPPORTED BY LANGUAGE FOR INDUSTRIAL ROBOTICS

FUNDED BY TA CR ZETA, 2017-2019

ABOUT THE PROJECT

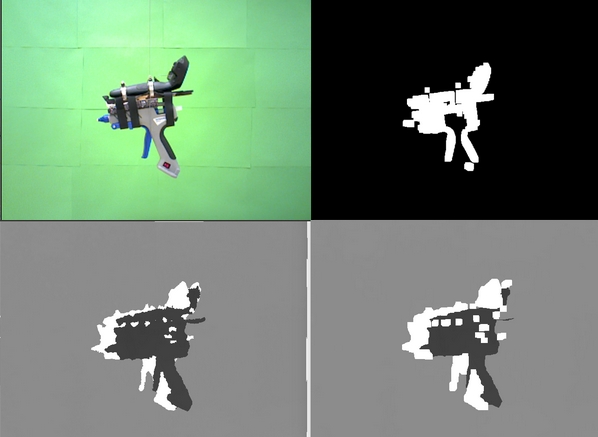

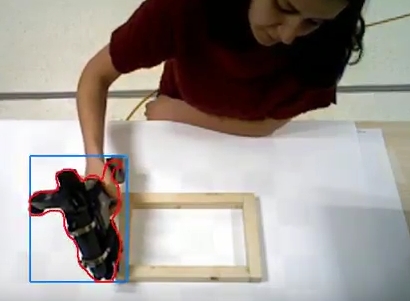

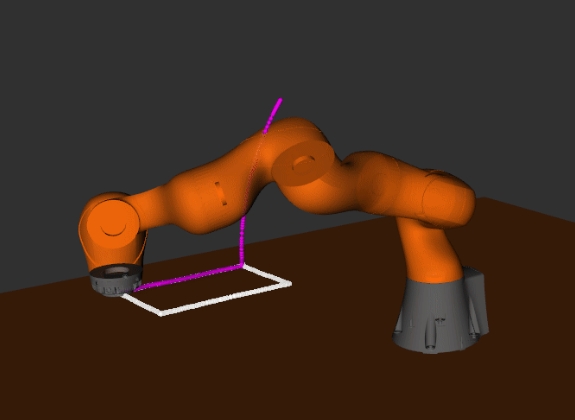

We consider the problem of visual imitation learning from human demonstration, where we wish to learn a 6-degree-of-freedom (6DOF) trajectory of an object from RGBD video of a human performing a complex object manipulation task. Reliably estimating and tracking the 6DOF pose of the object (its 3D position and 3D orientation) remains a key open challenge, as objects often lack distinctive texture, have symmetries, and are occluded by the human body.
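To make the notion of a 6DOF trajectory concrete, the sketch below shows one possible way to store it: a time-ordered list of poses, each with three translational and three rotational components. The names `Pose6DOF` and `path_length`, the roll/pitch/yaw parameterisation, and the choice of units and reference frame are illustrative assumptions and are not taken from the project's code.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Pose6DOF:
    """Hypothetical container for one tracked object pose (illustration only)."""
    t: float      # timestamp of the video frame, in seconds
    x: float      # position, assumed to be in metres in the camera frame
    y: float
    z: float
    roll: float   # orientation as roll/pitch/yaw in radians (assumed convention)
    pitch: float
    yaw: float


def path_length(trajectory):
    """Sum of straight-line distances between consecutive positions."""
    return sum(
        math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
        for a, b in zip(trajectory, trajectory[1:])
    )


# A two-frame toy trajectory: the object moves and rotates slightly about yaw.
trajectory = [
    Pose6DOF(t=0.0, x=0.0, y=0.0, z=0.0, roll=0.0, pitch=0.0, yaw=0.0),
    Pose6DOF(t=0.1, x=3.0, y=4.0, z=0.0, roll=0.0, pitch=0.0, yaw=0.5),
]
print(path_length(trajectory))
```

Euler angles are used here only for readability; a tracking pipeline might equally store orientation as a quaternion or a rotation matrix to avoid gimbal lock.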