Crow project

COLLABORATIVE ROBOTICS WORKPLACE OF THE FUTURE

FUNDED BY TRIO MPO, 2019-2021

About the project

One of the key goals of collaborative robotics is sharing a workspace between a robot and a human operator. The shared workspace is used by both the human and the robot to complete a common task, either in parallel or as part of active collaboration.

In both cases, the robot should not require separation from the human operator by a protective barrier (e.g., a metal cage). Important aspects of a collaborative robot are understanding the scene (i.e., where the human is, and which objects are in the scene or workspace and where they are) and the ability to react to the immediate needs of the operator (e.g., the need for a specific tool). Additionally, the robot should be able to predict future steps in the production process.

To achieve these goals, the following steps will be undertaken as part of this project:

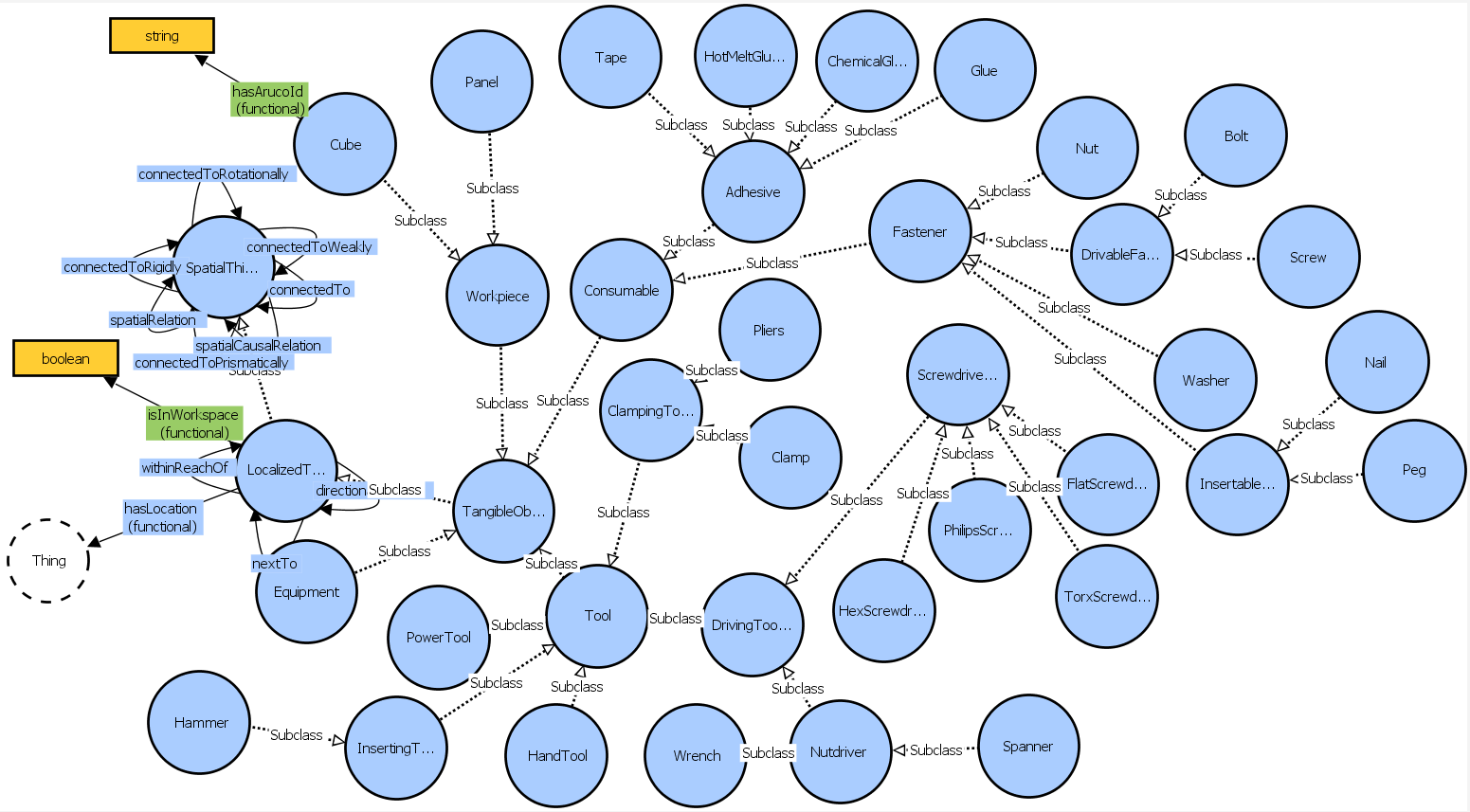

- Design and development of a device capable of scanning and reconstructing objects in the scene and the geometrical relationships between them. Similar devices exist on the market; however, most of them have insufficient accuracy.

- Research and development of tools for efficient bi-directional communication with a human operator. The tools should allow the human to easily describe the performed task or issue orders to the robot using natural language. The robot should be able to communicate its state or intentions (e.g., confirmation of orders). The communication interface should be easy to learn and use.

- Development of a system capable of storing and interconnecting data acquired from individual sensors or other channels (visual, tactile, language, etc.). The system should also be capable of predicting future states of the world (bounded by the workspace) and of estimating the needs of the operator in advance.

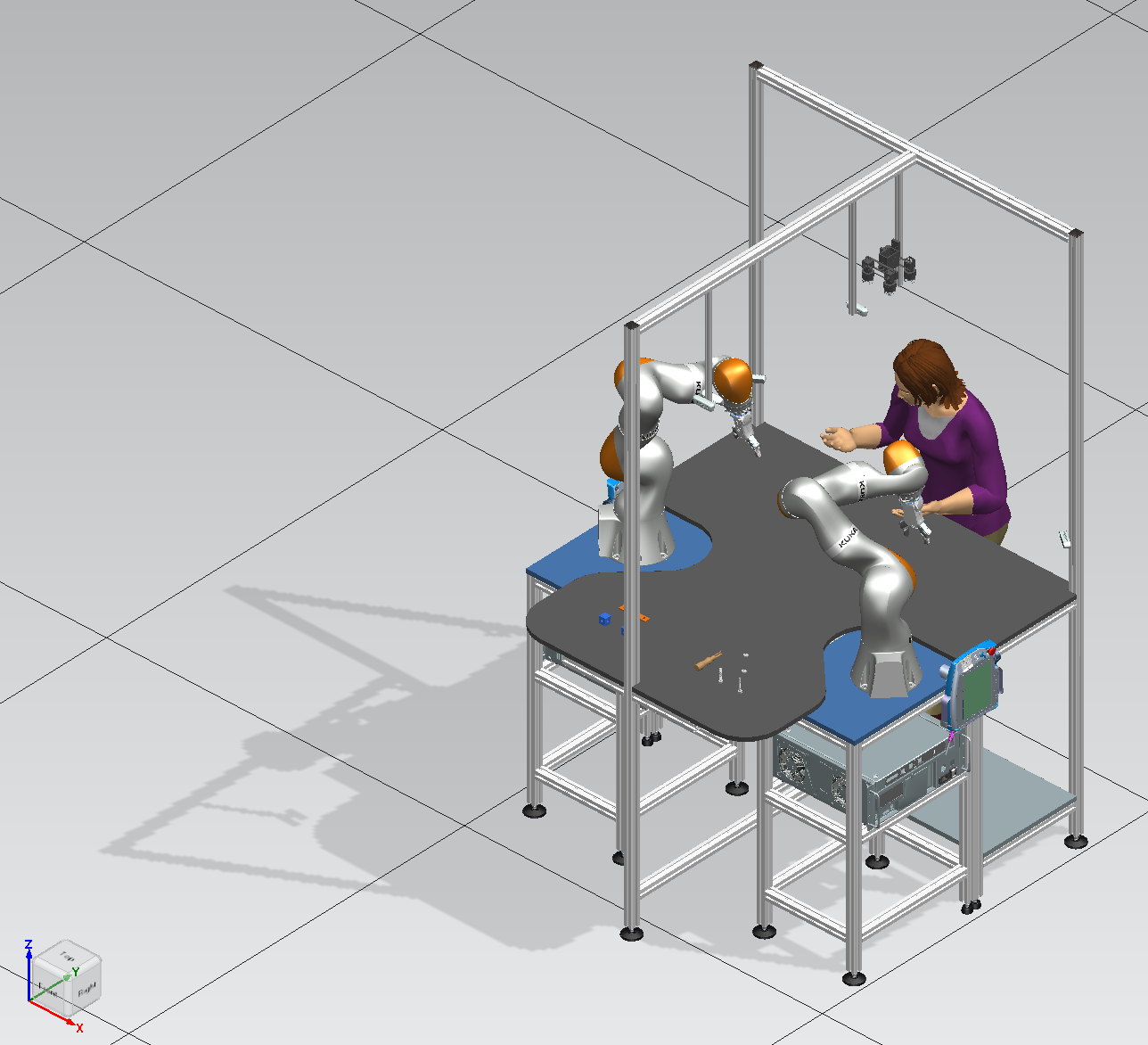

- Design of a workspace with a collaborative robot. The workspace should support efficient collaboration between the human operator and the robot.

The aforementioned steps shall be tested on the following scenarios of increasing complexity:

- Scenario 1a: Finding and fetching an object based on a simple command (e.g., “Pass me a screwdriver”)

- Scenario 1b: Finding and fetching an object based on a complex command (e.g., “Take the Phillips screwdriver next to the blue box and place it onto the table”)

- Scenario 2a: The ability to classify observed actions and react to them (e.g., the human operator picks up a screw, the robot recognizes this action, and the robot fetches a screwdriver)

- Scenario 2b: The ability to classify an observed task (a composite of actions) and act on it by predicting the following steps and helping the human operator accordingly (e.g., the robot recognizes that the human is assembling a gearbox and will need a screwdriver next; the robot finds and fetches the screwdriver and prepares it so that the operator can use it quickly)

- Scenario 3a: The robot can be asked to perform a task in parallel and prepare the resulting object (e.g., “Give me assembled piece 6”; the robot assembles the requested object from multiple parts and passes it to the human, preferably at the appropriate moment)

- Scenario 3b: The robot predicts future actions of the human and prepares the objects based on the prediction (similar to the previous scenario, but without the need for a request from the operator)