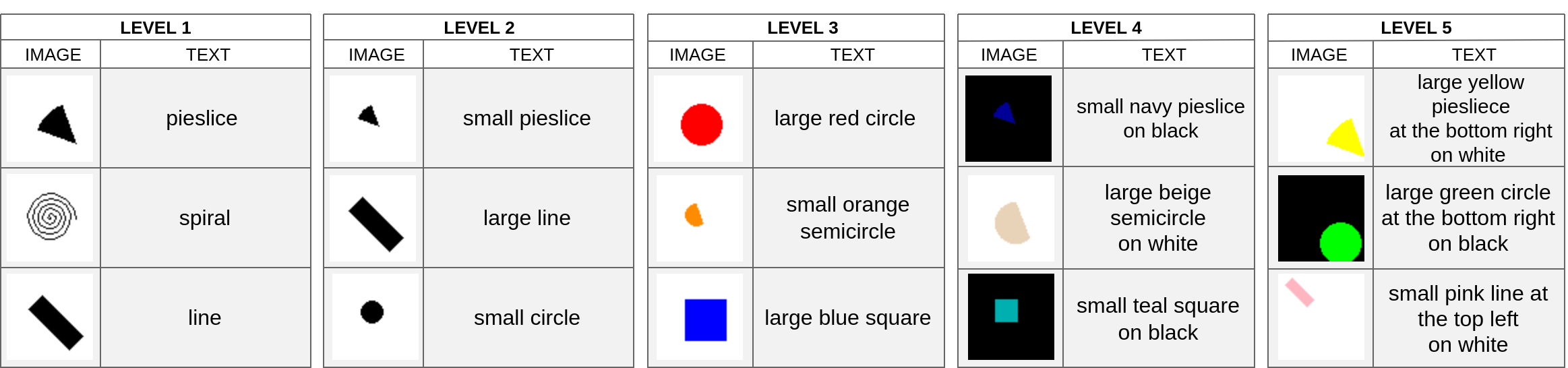

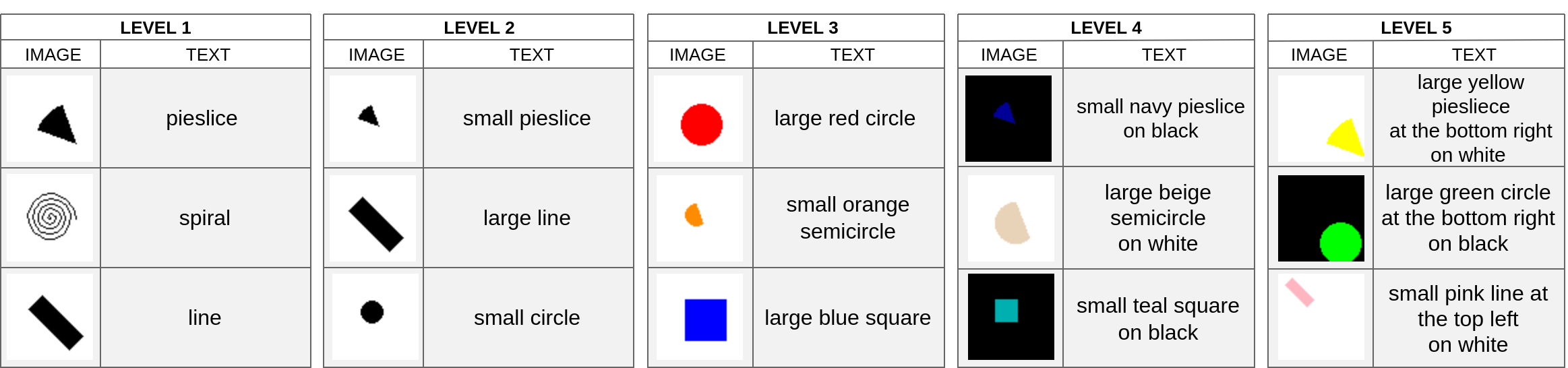

GeBiD dataset

We provide GeBiD (Geometric shapes Bimodal Dataset), a bimodal image-text dataset for systematic comparison of multimodal VAEs. It has 5 difficulty levels, defined by the number of featured attributes (shape, size, color, position and background color). You can either generate the dataset yourself or download a ready-to-use version.
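The actual generator is in the GitHub repository. The sketch below only illustrates the idea of difficulty levels as growing attribute sets. It assumes that level k uses the first k attributes in the order listed above. The value vocabularies and the function names `attributes_for_level` and `sample_caption` are hypothetical and are not taken from the GeBiD code.

```python
import random

# Attribute order as listed in the dataset description. ASSUMPTION: level k
# uses the first k attributes; this mapping is illustrative only.
ATTRIBUTES = ["shape", "size", "color", "position", "background color"]

# Hypothetical value vocabularies, for illustration only.
VALUES = {
    "shape": ["square", "circle", "triangle"],
    "size": ["small", "big"],
    "color": ["red", "green", "blue"],
    "position": ["at the top left", "at the bottom right"],
    "background color": ["on a white background", "on a black background"],
}


def attributes_for_level(level):
    """Return the attributes featured at a given difficulty level (1-5)."""
    if not 1 <= level <= len(ATTRIBUTES):
        raise ValueError("level must be between 1 and 5")
    return ATTRIBUTES[:level]


def sample_caption(level, rng):
    """Sample one random value per featured attribute and build a caption."""
    chosen = {a: rng.choice(VALUES[a]) for a in attributes_for_level(level)}
    # Adjectives first (size, color), then the shape noun.
    parts = [chosen[a] for a in ("size", "color", "shape") if a in chosen]
    # Then the prepositional phrases (position, background).
    parts += [chosen[a] for a in ("position", "background color") if a in chosen]
    return " ".join(parts)


rng = random.Random(0)
for level in range(1, 6):
    print(level, sample_caption(level, rng))
```

Under this assumption, a level-1 caption names only the shape, while a level-5 caption describes all five attributes.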

Dataset and code: GeBiD dataset and benchmark GitHub

Connected publication: Benchmarking Multimodal Variational Autoencoders: GeBiD Dataset and Toolkit.

Datasheet: GeBiD datasheet (PDF)

Benchmarking multimodal VAEs on the dataset

The dataset is accompanied by a toolkit that offers a systematic, unified way to train, evaluate and compare state-of-the-art multimodal variational autoencoders. The toolkit can be used with arbitrary datasets, in both unimodal and multimodal settings. By default, it provides implementations of the MVAE (paper), MMVAE (paper), MoPoE (paper) and DMVAE (paper) models, and contributions of further implementations are welcome.
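The listed models differ mainly in how they fuse the unimodal posteriors into a joint one. MVAE uses a product of experts (PoE), MMVAE uses a mixture of experts, and MoPoE uses a mixture of products of experts. The sketch below shows only the generic PoE fusion of diagonal Gaussian experts with a standard-normal prior expert, as described in the MVAE paper. It is not the toolkit's implementation, and the function name `product_of_experts` is hypothetical.

```python
import math


def product_of_experts(mus, logvars, include_prior=True):
    """Fuse diagonal Gaussian experts into one Gaussian (product of experts).

    mus, logvars: lists of per-modality vectors (lists of floats), all of the
    same length. Requires at least one expert if include_prior is False.
    Returns (mu, logvar) of the fused Gaussian.
    """
    dim = len(mus[0])
    # Standard-normal prior expert N(0, 1): precision 1, mean 0.
    precision_sum = [1.0 if include_prior else 0.0] * dim
    weighted_mean_sum = [0.0] * dim
    for mu, logvar in zip(mus, logvars):
        for d in range(dim):
            precision = math.exp(-logvar[d])  # 1 / sigma^2
            precision_sum[d] += precision
            weighted_mean_sum[d] += mu[d] * precision
    # Joint mean is the precision-weighted average; joint variance is 1 / total precision.
    fused_mu = [w / p for w, p in zip(weighted_mean_sum, precision_sum)]
    fused_logvar = [-math.log(p) for p in precision_sum]
    return fused_mu, fused_logvar


# Two 1-D experts (e.g. image and text) with unit variance (logvar 0.0).
print(product_of_experts([[1.0], [3.0]], [[0.0], [0.0]], include_prior=False))
print(product_of_experts([[1.0], [3.0]], [[0.0], [0.0]]))
```

Without the prior, the two experts above fuse to mean 2.0 and variance 0.5. Adding the prior expert pulls the mean toward zero (to 4/3) and shrinks the variance to 1/3. This is why PoE yields a sharper joint posterior than any single modality.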

All code to generate, download or process the dataset, together with the option to benchmark your own algorithm on it, is available here: GeBiD GitHub.

Licensing

The newly provided datasets and benchmarks are copyrighted by us and published under the CC BY-NC-SA 4.0 license.

If you use the code or the dataset, please give us attribution using the following citation:

Erratum

The main changes to the dataset and code are listed here. If you find any issue with the dataset, please let us know so we can fix it (karla.stepanova@cvut.cz):

Usage of the dataset

If you have used or plan to use our dataset, please let us know so we can list your work here. It also shows us that the effort we put into preparing the dataset was useful.

Contributions to the dataset are also welcome!

Contact: karla.stepanova@cvut.cz

The dataset has been used in the following works: